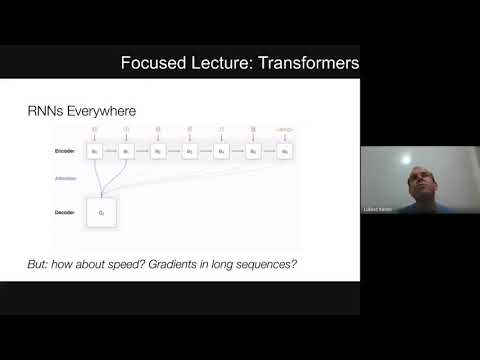

Lukasz Kaiser (Google Brain) (Jul 2020)

Transformer models revolutionized NLP by replacing sequential recurrence with the self-attention mechanism, which processes all positions of a sequence in parallel. This leads to faster training and better modeling of long-range dependencies. Transformers’ applications have since expanded beyond NLP, showing promise in fields such as time series analysis, robotics, and reinforcement learning.
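As a rough illustration of the self-attention mechanism mentioned above, here is a minimal NumPy sketch of single-head scaled dot-product self-attention. The function names, the randomly initialized weight matrices, and the dimensions are assumptions chosen for illustration; they are not taken from the talk.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum before exponentiating for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (illustrative sketch).

    x:             (seq_len, d_model) input token representations
    w_q, w_k, w_v: (d_model, d_k) projection matrices (hypothetical names)
    """
    q = x @ w_q  # queries
    k = x @ w_k  # keys
    v = x @ w_v  # values
    # Every position is compared with every other position in one matrix
    # product, so no step depends on the result for a previous position.
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8  # toy sizes, chosen arbitrarily
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape)
print(weights.sum(axis=-1))
```

Because the attention scores for all pairs of positions come from a single matrix product, the computation has no sequential dependency along the sequence, which is what allows the parallel processing described above.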