Geoffrey Hinton

Geoffrey Hinton (University of Toronto Professor) - LLMs in Medicine. They Understand and Have Empathy (Dec 2023)

AI has the potential to revolutionize healthcare with improved diagnostics, personalized treatments, and assistance for cancer patients' relatives, but ethical considerations and safety concerns must be addressed. AI's cognitive abilities and consciousness remain subjects of debate, challenging traditional notions of human uniqueness and prompting discussions on rights and coexistence with machines.

Demis Hassabis, Geoffrey Hinton, Ilya Sutskever (MIT CBMM Panel Chair) - CBMM10 Panel (Nov 2023)

Neuroscience and AI offer complementary insights into intelligence, driving advances in technology and a deeper understanding of our place in the universe. Collaboration between the two fields explores the brain's complexities and inspires AI development, shaping our understanding of intelligence.

Geoffrey Hinton (University of Toronto Professor) - Winter School on Deep Learning (Nov 2023)

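The Winter School on Deep Learning explored advances in AI, including the forward-forward algorithm. This is a more biologically plausible way to train neural networks. It replaces the forward and backward passes of backpropagation with two forward passes, one on positive (real) data and one on negative data, and may align more closely with the brain's learning mechanisms. Because each layer learns locally, the algorithm still works when parts of the forward computation are unknown or non-differentiable. That makes it suitable for low-power hardware and for applications requiring biological realism.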
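The sketch below makes the forward-forward idea concrete for a single layer. It assumes the formulation in Hinton's 2022 forward-forward paper. A layer's "goodness" is the sum of its squared ReLU activities. The layer is trained with a local logistic loss so that goodness exceeds a threshold θ on positive data and falls below it on negative data. The function names, θ = 2.0, the learning rate, the equal initial weights, and the toy patterns are illustrative assumptions, not details from the talk.

```python
import math


def relu(z):
    return max(0.0, z)


def forward(weights, x):
    # Activities of one fully connected ReLU layer (biases omitted for brevity).
    return [relu(sum(w * xj for w, xj in zip(row, x))) for row in weights]


def goodness(h):
    # Forward-forward "goodness": the sum of squared activities.
    return sum(a * a for a in h)


def p_positive(weights, x, theta=2.0):
    # Probability the layer assigns to "x is positive data": sigmoid(goodness - theta).
    return 1.0 / (1.0 + math.exp(-(goodness(forward(weights, x)) - theta)))


def ff_step(weights, x, is_positive, theta=2.0, lr=0.03):
    """One local update: raise goodness for positive data, lower it for negative data.

    Only this layer's weights change; no error signal is sent to any other layer.
    """
    h = forward(weights, x)
    p = 1.0 / (1.0 + math.exp(-(goodness(h) - theta)))
    target = 1.0 if is_positive else 0.0
    # For the logistic loss -[t*log(p) + (1-t)*log(1-p)], dLoss/dGoodness = p - t.
    d_goodness = p - target
    for i, row in enumerate(weights):
        for j in range(len(row)):
            # dGoodness/dw_ij = 2 * h_i * x_j (zero for inactive ReLUs, where h_i = 0).
            row[j] -= lr * d_goodness * 2.0 * h[i] * x[j]
    return weights


# Toy usage (illustrative data): one "positive" and one "negative" pattern.
W = [[0.5] * 4 for _ in range(3)]
pos = [1.0, 0.0, 1.0, 0.0]
neg = [0.0, 1.0, 0.0, 1.0]
for _ in range(200):
    ff_step(W, pos, True)
    ff_step(W, neg, False)
print(p_positive(W, pos), p_positive(W, neg))  # positive pattern now scores far higher
```

The update uses only the layer's own input and activities. Each layer in a stack could therefore be trained this way without a backward pass through the network.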

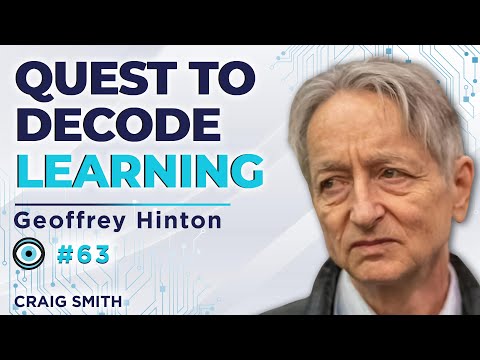

Geoffrey Hinton (University of Toronto Professor) - In conversation with the Godfather of AI (Jul 2023)

Geoffrey Hinton, a pioneer in AI, highlights progress in neural networks but emphasizes the need to address risks, biases, and existential threats posed by AI development. Hinton advocates for a balanced approach to AI development, focusing on ethical practices, human adaptability, and global cooperation in shaping an AI-augmented world.

Geoffrey Hinton (University of Toronto Professor) - Digital Intelligence versus Biological Intelligence (Jul 2023)

Digital intelligence excels in data analysis and complex computations, while biological intelligence thrives in adaptability and real-time decision-making. Data-centric, computational approaches in AI research and application have immense potential, challenging paradigms and enabling unprecedented advancements.

Geoffrey Hinton (University of Toronto Professor) - The Godfather in Conversation (Jun 2023)

Neural networks have advanced AI's capabilities in perception, motor control, and reasoning, leading to applications in various fields, but challenges remain in interpretability, data requirements, and computational demands. AI's societal impact raises ethical considerations, necessitating responsible development and control measures to mitigate potential risks and harness AI's benefits.

Geoffrey Hinton (University of Toronto Professor) - King's College, Cambridge - Entrepreneurship Lab (May 2023)

Geoffrey Hinton's talk at King's College covered his academic journey, neural networks, AI's understanding and intelligence, and the path to artificial general intelligence. He emphasized the importance of desire and drive for success, cautioned against pursuing applied research solely for funding, and discussed the ethical considerations surrounding AI development.

Geoffrey Hinton (University of Toronto Professor) - S3 E9 Geoff Hinton, the "Godfather of AI", quits Google to warn of AI risks (Host (May 2023)

Digital intelligence could surpass biological intelligence in the next stage of evolution, but it poses risks that require careful consideration and management. AI's potential benefits are immense, but its alignment with human values and safety remains a critical challenge.

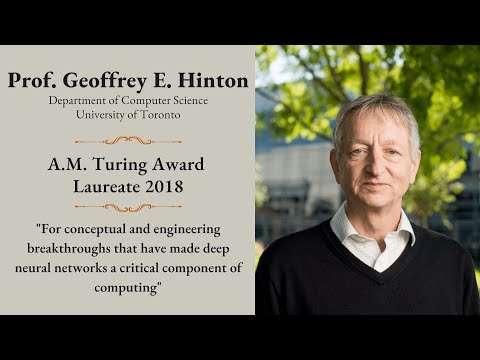

Geoffrey Hinton (University of Toronto Professor) - Two Paths to Intelligence (May 2023)

Hinton predicts that AI systems may soon surpass human intelligence and underscores the urgent need for robust safety measures.

Geoffrey Hinton (University of Toronto Professor) - The Godfather of A.I. Has Some Regrets (May 2023)

Geoffrey Hinton, a pioneer in the field of AI, highlights the transformative impact of AI but cautions against unchecked progress, urging responsible development that weighs ethical dilemmas and potential risks. His work on neural networks revolutionized AI and led to broad applications, yet he emphasizes balanced advancement to mitigate potential negative consequences.

Geoffrey Hinton (University of Toronto Professor) - Andrew Ng Geoffrey Hinton Interview (Mar 2023)

Geoffrey Hinton's research into neural networks, backpropagation, and deep belief nets has significantly shaped the field of AI, and his insights on unsupervised learning and capsule networks offer guidance for future AI professionals. Hinton's work bridged the gap between psychological and AI views on knowledge representation and demonstrated the potential of neural networks for natural language processing and efficient inference.

Geoffrey Hinton (University of Toronto Professor) - MoroccoAI Conference 2022 Honorary Keynote Prof. Geoffrey Hinton - The Forward-Forward Algorithm (Dec 2022)

The Forward-Forward Algorithm offers an alternative to backpropagation in neural network training, particularly beneficial for analog or noisy hardware and provides insights into brain function. It also challenges the distinction between software and hardware, presenting a new hardware paradigm inspired by the brain's adaptability and continuous learning capabilities.

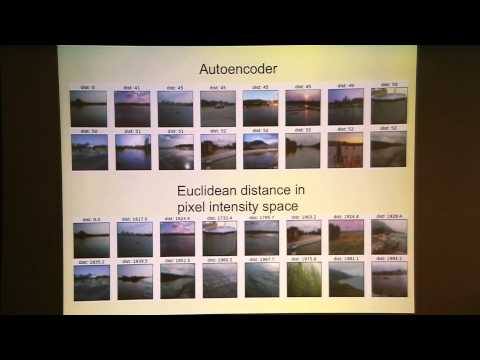

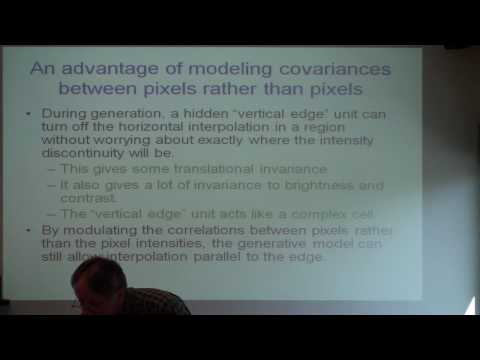

Geoffrey Hinton (University of Toronto Professor) - Deep Learning with Multiplicative Interactions (Oct 2022)

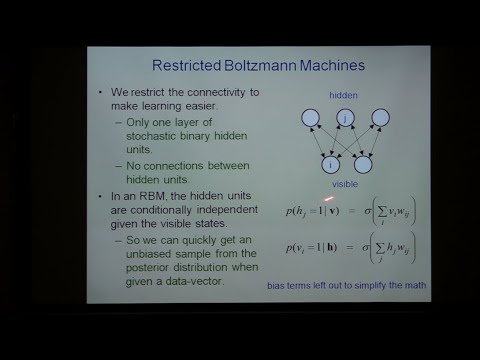

Energy-based generative models, particularly Restricted Boltzmann Machines (RBMs) and their extensions, have revolutionized unsupervised learning and generative processes, enabling the capture of complex data distributions and applications in fields like image and speech recognition. Geoff Hinton's contributions to energy-based generative models have significantly advanced unsupervised learning and opened new avenues for practical applications.

Geoffrey Hinton (University of Toronto Professor) - Can AI Be Creative? (Oct 2022)

AI has potential for exploratory and combinational creativity but may struggle with transformational creativity, which requires deep insight and understanding. AI can augment human creativity by providing suggestions, generating ideas, and identifying patterns, leading to innovative outcomes.

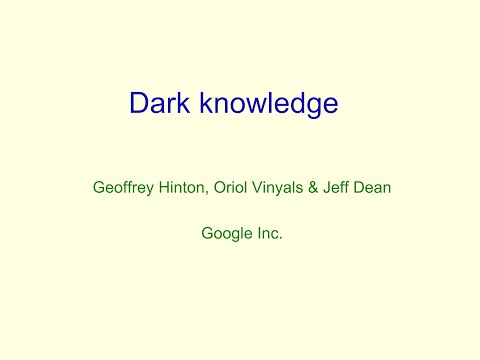

Geoffrey Hinton (University of Toronto Professor) - Distilling the Knowledge in a Neural Network (Aug 2022)

Geoffrey Hinton argues that training and deployment have different requirements. A large model (or ensemble) can therefore be trained first, and its knowledge then transferred to a smaller, cheaper model through knowledge distillation. The small model learns to match the large model's softened output probabilities, which carry information about how the large model generalizes. These ideas have broad implications for how AI models are built and deployed.
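The soft-target idea can be sketched in a few lines of plain Python. This sketch assumes the loss described in Hinton, Vinyals and Dean's 2015 distillation paper. The teacher's logits are softened with a temperature T. The student is then trained on two terms: cross-entropy with those soft targets, and ordinary cross-entropy with the hard label. The soft term is multiplied by T², which the paper recommends so the gradient scale stays comparable as T changes. The mixing weight `alpha`, T = 4, and the example logits are illustrative assumptions.

```python
import math


def softmax(logits, T=1.0):
    # Temperature-scaled softmax; subtracting the max keeps exp() numerically stable.
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, pred_probs):
    # -sum t * log(p), skipping zero-probability targets.
    return -sum(t * math.log(p) for t, p in zip(target_probs, pred_probs) if t > 0)


def distillation_loss(student_logits, teacher_logits, hard_label, T=4.0, alpha=0.9):
    soft_targets = softmax(teacher_logits, T)
    soft_student = softmax(student_logits, T)
    # Soft-target term, scaled by T**2 so its gradients keep a comparable magnitude.
    soft_loss = cross_entropy(soft_targets, soft_student) * T * T
    # Ordinary cross-entropy against the true label at T = 1.
    hard_loss = -math.log(softmax(student_logits, 1.0)[hard_label])
    return alpha * soft_loss + (1.0 - alpha) * hard_loss


teacher_logits = [5.0, 2.0, -1.0]
print(softmax(teacher_logits, T=1.0))  # sharply peaked on class 0
print(softmax(teacher_logits, T=4.0))  # softer: the relative scores of classes 1 and 2 become visible
```

Raising T exposes the teacher's "dark knowledge": how much more it prefers one wrong class over another. A student trained at T = 1 on hard labels alone would never see this.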

Geoffrey Hinton (University of Toronto Professor) - Robot Brains Podcast, Twitter Q&A (Jun 2022)

AI's practical applications range from customer service to climate change mitigation, while its ethical considerations center around responsible development and regulation. AI's evolution is marked by the pursuit of deep learning, with a focus on spiking neural networks and symbiotic intelligence.

Geoffrey Hinton (University of Toronto Professor) - WHAT IS AI? (Apr 2022)

Recent advancements in AI, particularly deep learning, have generated excitement and skepticism, prompting discussions about its limitations and the need for balanced perspectives. AI's progress has led to transformative changes in industries, while also highlighting the challenges in simulating intelligence and explaining the behavior of neural networks.

Geoffrey Hinton (University of Toronto Professor) - Adaptation at Multiple Time Scales (Feb 2022)

Geoffrey Hinton's work on deep learning has advanced AI, impacting speech recognition and object classification. Fast weights in neural networks show promise for AI development, offering a more dynamic and efficient learning environment.

Geoffrey Hinton (Google Scientific Advisor) - Distillation and the Brain (Aug 2021)

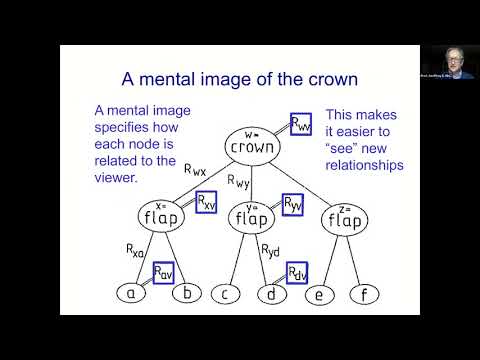

Neural Capsules theory presents a hierarchical and compositional approach to object recognition, emphasizing viewpoint-independent relationships and identity-specific embeddings. Knowledge transfer through distillation and co-distillation enables efficient training of smaller models and improves generalization.

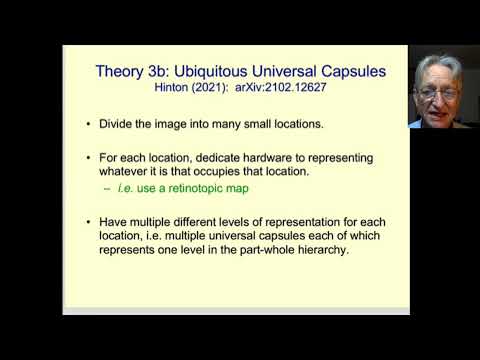

Geoffrey Hinton (Google Scientific Advisor) - How to represent part-whole hierarchies in a neural net (Jul 2021)

Geoffrey Hinton's proposed GLOM neural network is designed to mimic human vision by using hierarchical structures and coordinate frames to process images, offering a deeper understanding of visual perception and cognition. The work also provides insights into neuroscience, bridging the gap between AI and cognitive science.
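GLOM was presented as an idea rather than a working system, so the following is only a hedged sketch of one ingredient described in Hinton's GLOM paper. At each level, a location's embedding is updated toward an average of four inputs: its previous state, a bottom-up prediction, a top-down prediction, and an attention-weighted average of same-level embeddings. The attention weights are a softmax of scaled dot products between the embeddings themselves. Repeating this update pulls agreeing locations together into "islands" that represent the same part. The equal mixing weights, β, and the use of plain vectors in place of the bottom-up and top-down neural nets are assumptions.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def same_level_attention(embeddings, i, beta=1.0):
    # Softmax of beta * dot products: query, key and value are all the embeddings themselves.
    scores = [beta * dot(embeddings[i], e) for e in embeddings]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(embeddings[i])
    return [sum(w * e[k] for w, e in zip(weights, embeddings)) / total for k in range(dim)]


def glom_update(prev, bottom_up, top_down, embeddings, i, beta=1.0):
    # Equal-weight average of the four contributions (the equal weights are an assumption).
    lateral = same_level_attention(embeddings, i, beta)
    return [(p + b + t + l) / 4.0 for p, b, t, l in zip(prev, bottom_up, top_down, lateral)]


# Toy level with three locations: two already agree, one differs.
level = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(same_level_attention(level, 0, beta=10.0))  # pulled toward [1, 0], the island it agrees with
```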

Geoffrey Hinton (Google Scientific Advisor) - Geoffrey Hinton (Mar 2021)

Neural networks have revolutionized AI, enabling machines to learn and perceive data like humans, leading to breakthroughs in fields like image recognition and machine translation. The future of AI involves exploring multiple timescales in neural networks to enhance memory and reasoning capabilities, mimicking human learning and adaptation.

Geoffrey Hinton (Google Scientific Advisor) - Part-Whole Hierarchies in Neural Networks (Mar 2021)

Geoffrey Hinton's proposed GLOM system offers a new approach to object recognition that accounts for variability in visual representation and spatial relationships, challenging the explicit tree structures of traditional AI. Hinton emphasizes the role of strong beliefs in driving AI progress and expresses concern about the risks of unregulated AI, particularly autonomous weapons.

Geoffrey Hinton (Google Scientific Advisor) - How to represent part whole hierarchies in a neural network | ACM SIGKDD India Chapter (Jan 2021)

Geoffrey Hinton's ideas on combining neural networks, transformer-style attention, and part-whole hierarchies are influencing computer vision, pushing the boundaries of image processing and AI. Ongoing research that combines these techniques promises to deepen our understanding of vision systems and open new avenues for technological innovation.

Geoffrey Hinton (Google Scientific Advisor) - Geoff Hinton speaks about his latest research and the future of AI (Dec 2020)

Geoff Hinton's research in unsupervised learning seeks to understand and replicate human learning processes. This research includes methods such as capsule networks and SimCLR, together with his insights into contrastive learning and the relationship between AI learning systems and brain function. This work is advancing the field of AI.
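To illustrate the contrastive learning mentioned above, here is a minimal sketch of the NT-Xent loss used in SimCLR (Chen, Kornblith, Norouzi and Hinton, 2020). The loss rewards each example's two augmented views for being more similar to each other than to any other view in the batch. Similarity is measured by temperature-scaled cosine similarity. Plain Python lists stand in for projection-head outputs. The pairing convention (z[2k], z[2k+1]), τ = 0.5, and the toy vectors are assumptions.

```python
import math


def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def nt_xent(z, tau=0.5):
    """z holds 2N projections; z[2k] and z[2k+1] are two augmented views of example k."""
    n = len(z)
    total = 0.0
    for i in range(n):
        j = i + 1 if i % 2 == 0 else i - 1  # index of i's positive partner
        logits = [cosine(z[i], z[k]) / tau for k in range(n) if k != i]
        # Log-sum-exp over every other view (the positive and all negatives), stabilised by the max.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - cosine(z[i], z[j]) / tau
    return total / n


aligned = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]     # each pair of views agrees
mismatched = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]  # each view resembles a negative
print(nt_xent(aligned), nt_xent(mismatched))  # the aligned batch has the lower loss
```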

Geoffrey Hinton (Google Scientific Advisor) - Turing Award Winners Keynote Event (Feb 2020)

The evolution of AI, driven by pioneers like Hinton, LeCun, and Bengio, has shifted its focus from supervised CNNs toward self-supervised learning, addressing the limitations of earlier approaches and exploring new horizons. Advances such as the transformer's attention mechanism and stacked capsule autoencoders aim to improve perception and the handling of complex inference problems.

Geoffrey Hinton (Google Scientific Advisor) - Heidelberg Laureate Forum (Jan 2020)

Geoffrey Hinton's intellectual journey, marked by early curiosity and rebellion, led him to challenge conventional norms and make groundbreaking contributions to artificial intelligence, notably in neural networks and backpropagation. Despite initial skepticism and opposition, his unwavering dedication and perseverance revolutionized the field of AI.

Geoffrey Hinton (Google Scientific Advisor) - The AI Revolution | Toronto Global Forum (Sep 2019)

Deep learning, inspired by the human brain, has revolutionized AI, leading to advancements in diverse fields, but its future depends on ethical practices, regulation, and international cooperation.

Geoffrey Hinton (Google Scientific Advisor) - Does the brain do backpropagation? (May 2019)

Geoffrey Hinton, a pioneer in deep learning, has made significant contributions to AI and neuroscience, leading to a convergence between the two fields. His work on neural networks, backpropagation, and dropout regularization has not only enhanced AI but also provided insights into understanding the human brain.

Geoffrey Hinton (Google Scientific Advisor) - Google I/O (May 2019)

Geoffrey Hinton's groundbreaking work in neural networks revolutionized AI by mimicking the brain's learning process and achieving state-of-the-art results in tasks like speech recognition and image processing. His approach, inspired by the brain, laid the foundation for modern AI and raised questions about the potential and limitations of neural networks.

Geoffrey Hinton (Google Scientific Advisor) - The Foundations of Deep Learning (Feb 2018)

Neural networks, empowered by backpropagation, have revolutionized computing, enabling machines to learn from data and adapt to various applications, influencing fields like image recognition, natural language processing, and healthcare. These networks excel in tasks that involve complex data patterns and have exceeded human performance in certain domains.

Geoffrey Hinton (Google Scientific Advisor) - The Neural Network Revolution (Jan 2018)

Neural networks have revolutionized various fields, from language translation and speech recognition to healthcare and finance, by outperforming logic-based AI systems in learning and adapting from vast data sets. They face challenges such as adversarial attacks, explainability, and regulatory compliance, but hold great promise for the future, including self-driving vehicles, personalized medicine, and a deeper understanding of human cognition.

Geoffrey Hinton (Google Scientific Advisor) - Capsule Theory talk at MIT (Nov 2017)

Capsule Networks introduce a novel approach to entity representation and structural integrity in AI models, while Convolutional Neural Networks have been influential in object recognition but face challenges in shape perception and viewpoint invariance.

Geoffrey Hinton (Google Scientific Advisor) - New York Times Interview (Nov 2017)

Geoffrey Hinton's work on neural networks, including his group's use of convolutional neural networks (CNNs), has revolutionized image recognition in AI. His approaches to object recognition and mental imagery point toward AI systems that learn and reason more like humans.

Geoffrey Hinton (Google Scientific Advisor) - What is Wrong With Convolutional Neural Nets? (Sep 2017)

Capsule networks, proposed by Geoffrey Hinton, address limitations of current neural networks by representing objects as vectors with properties like shape and pose, enabling equivariance and robustness to viewpoint changes. Despite challenges, capsule networks offer a promising new direction in computer vision.
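One concrete piece of the capsule idea is how a capsule's output vector is read. Its orientation encodes properties such as pose, and its length encodes the probability that the entity exists. The sketch below shows the "squashing" nonlinearity from Sabour, Frosst and Hinton's 2017 dynamic-routing paper. It keeps a vector's direction while mapping its length into [0, 1). Whether the talk used exactly this function is an assumption, and routing between capsules is not shown.

```python
import math


def squash(s):
    """Capsule nonlinearity: v = (|s|^2 / (1 + |s|^2)) * s / |s|.

    The direction of s is preserved; the length is mapped into [0, 1)
    so it can be read as the probability that the entity is present.
    """
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        return [0.0 for _ in s]
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [scale * x for x in s]


for s in ([0.1, 0.0], [3.0, 4.0], [30.0, 40.0]):
    print(s, math.hypot(*squash(s)))  # lengths grow toward, but never reach, 1
```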

Geoffrey Hinton (Google Scientific Advisor) - What is wrong with convolutional neural nets? | Fields Institute (Sep 2017)

Geoffrey Hinton's contributions to neural networks include popularizing rectified linear units (ReLUs) and developing capsule networks. Capsule networks represent viewpoint changes equivariantly while keeping their learned knowledge viewpoint-invariant, and they handle occlusion and noise in visual processing. Capsules aim to capture object properties such as coordinates, albedo, and velocity, enabling efficient representation of position, scale, orientation, and shear.

Geoffrey Hinton (Google Scientific Advisor) - Heroes of Deep Learning (Aug 2017)

Geoffrey Hinton's pioneering work in neural networks and deep learning has bridged insights from brain research to AI breakthroughs, reshaping our understanding of AI. Hinton's intellectual journey highlights the significance of interdisciplinary thinking and the relentless pursuit of innovative ideas in advancing AI.

Geoffrey Hinton (Google Scientific Advisor) - Using Fast Weights to Store Temporary Memories (Jul 2017)

Geoffrey Hinton, a pioneer in deep learning, has significantly advanced the capabilities of neural networks through his work on fast weights and their integration into recurrent neural networks. Hinton's research has opened new avenues in neural network architecture, offering more efficient and dynamic models for processing and integrating information.
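Here is a minimal sketch of fast-weight storage. It assumes the Hebbian update from the 2016 fast-weights paper by Ba, Hinton and colleagues, A(t) = λ·A(t-1) + η·h(t)h(t)ᵀ. Recent hidden states are written into a rapidly decaying matrix A. A stored state can then be retrieved by multiplying A with a query state. The decay λ = 0.95, the rate η = 0.5, and the toy vectors are illustrative assumptions. The full model also feeds A·h back into the recurrent update with layer normalization, which is omitted here.

```python
def outer(u, v):
    return [[a * b for b in v] for a in u]


def fast_weight_update(A, h, decay=0.95, eta=0.5):
    # A(t) = decay * A(t-1) + eta * h h^T  (Hebbian outer-product storage with fast decay)
    return [[decay * a + eta * hh for a, hh in zip(row_a, row_h)]
            for row_a, row_h in zip(A, outer(h, h))]


def matvec(A, x):
    # Retrieval: A x is dominated by stored states that resemble the query x.
    return [sum(a * b for a, b in zip(row, x)) for row in A]


A = [[0.0] * 3 for _ in range(3)]
A = fast_weight_update(A, [1.0, 0.0, 0.0])   # store one recent state
A = fast_weight_update(A, [0.0, 1.0, 0.0])   # store a newer state; the first one decays
print(matvec(A, [1.0, 0.0, 0.0]))            # retrieves the first state, scaled by eta * decay
```

The slow weights would still be learned by ordinary gradient descent. The fast weights act as a short-lived associative memory that needs no extra hidden units to hold recent history.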

Geoffrey Hinton (Google Scientific Advisor) - What's Wrong with Convolutional Neural Networks (Apr 2017)

Capsule networks, inspired by human perception, enhance neural networks with structural organization and entity representation, addressing limitations of traditional networks. Capsule networks employ concepts like coordinate frames, equivariance, and linear manifolds to improve object recognition and perception.

Geoffrey Hinton (Google Scientific Advisor) - Lecture 13/16 (Jan 2017)

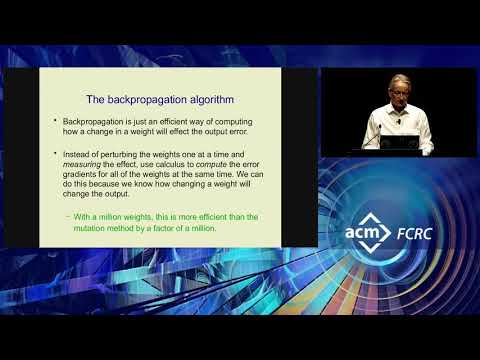

Backpropagation's initial limitations were practical, not theoretical, leading to the rise of SVMs, but deep belief nets offered a new direction. Deep belief nets were challenging to learn, but variational learning and the wake-sleep algorithm emerged as potential solutions.

Geoffrey Hinton (Google Scientific Advisor) - Lecture 4/16 (Dec 2016)

Neural networks have been used to revolutionize relational learning and language modeling, leading to advancements in natural language processing and machine learning. By capturing semantic relationships and learning from relational data, neural networks have enabled more accurate word prediction and a deeper understanding of language and its intricacies.

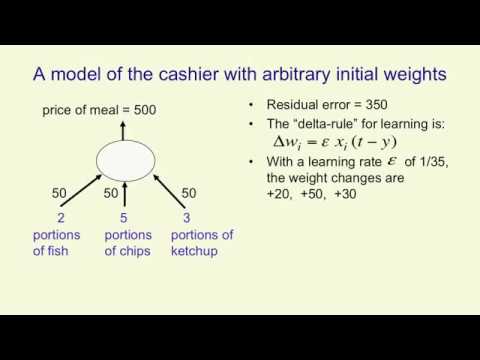

Geoffrey Hinton (Google Scientific Advisor) - Neural Networks for Machine Learning by Geoffrey Hinton (Lecture 3/16) (Dec 2016)

A linear neuron computes a simple weighted sum of its inputs. The delta rule adjusts each weight in proportion to the prediction error times that weight's input. Backpropagation efficiently computes error derivatives for hidden units and weights, enabling learning in multiple layers of features.
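The delta rule for a linear neuron can be written as Δw_i = ε·x_i·(t - y), where y is the neuron's output and t the target. The sketch below applies it online in plain Python. The learning rate, the starting weights, and the toy data generated from hypothetical "true" weights are illustrative assumptions.

```python
def linear_neuron(w, x):
    # The output is just the weighted sum of the inputs.
    return sum(wi * xi for wi, xi in zip(w, x))


def delta_rule_epoch(w, data, lr=0.01):
    """One online pass over (inputs, target) pairs: w_i += lr * x_i * (t - y)."""
    for x, t in data:
        error = t - linear_neuron(w, x)
        w = [wi + lr * xi * error for wi, xi in zip(w, x)]
    return w


# Toy data (illustrative): targets come from hidden "true" weights [150, 50, 100].
true_w = [150.0, 50.0, 100.0]
inputs = [[2.0, 5.0, 3.0], [1.0, 1.0, 1.0], [3.0, 0.0, 2.0], [0.0, 4.0, 1.0], [1.0, 2.0, 0.0]]
data = [(x, linear_neuron(true_w, x)) for x in inputs]

w = [50.0, 50.0, 50.0]
for _ in range(3000):
    w = delta_rule_epoch(w, data)
print(w)  # approaches true_w
```

The learning rate must be small relative to the squared length of the input vectors. Otherwise the online updates overshoot and diverge.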

Geoffrey Hinton (Google Scientific Advisor) - Lecture 2/16 (Dec 2016)

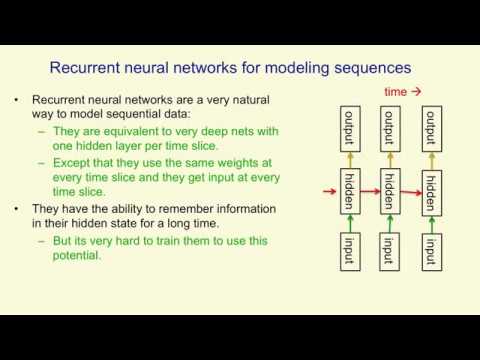

Neural networks, such as RNNs, symmetrically connected networks, and perceptrons, offer varying capabilities for processing data and feature learning, highlighting their significance in advancing artificial intelligence. Despite their limitations, neural networks continue to evolve, demonstrating remarkable proficiency in handling sequential data, pattern recognition, and feature learning.

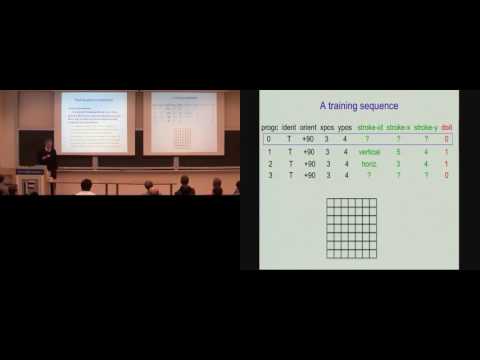

Geoffrey Hinton (Google Scientific Advisor) - Neural Networks for Machine Learning by Geoffrey Hinton (Lecture 1/16) (Dec 2016)

Machine learning, a rapidly evolving field, has transformed many domains by enabling computers to recognize patterns, detect anomalies, and perform brain-like computations. Different types of machine learning, from supervised to unsupervised learning, address different challenges and have wide-ranging applications.

Geoffrey Hinton (Google Scientific Advisor) - Neural Networks for Machine Learning (Lecture 7/16) (Jul 2016)

Sequence modeling evolved from autoregressive models to LSTM networks, addressing limitations like memory capacity and long-term dependencies at the cost of computational complexity. LSTM networks excel in tasks requiring long-term memory, like handwriting recognition, due to their memory cells and gated mechanisms.
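The gating described above can be sketched for a single LSTM unit. The sketch assumes the standard formulation with input, forget, and output gates. The cell state is updated additively as c = f·c_prev + i·g, which is what lets information survive many time steps. The parameter layout (a dict of hypothetical (w_x, w_h, b) triples per gate) and the hand-set values in the usage example are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM. p maps each gate name to a (w_x, w_h, b) triple."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))        # how much new content to write
    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # proposed new content
    c = f * c_prev + i * g           # additive memory path
    h = o * math.tanh(c)
    return h, c


params = {
    "input": (10.0, 0.0, -5.0),      # opens only when x = 1
    "forget": (0.0, 0.0, 10.0),      # nearly always keep the old cell state
    "output": (0.0, 0.0, 10.0),      # nearly always expose the cell state
    "candidate": (10.0, 0.0, 0.0),   # proposes about +1 when x = 1, 0 when x = 0
}
h, c = lstm_step(1.0, 0.0, 0.0, params)   # write once
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)   # then 50 steps with no input
print(c)  # still close to the stored value
```

With the forget gate biased open, the stored value decays only slightly over 50 steps. With the forget gate biased shut, the same value is erased almost immediately.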

Geoffrey Hinton (Google Scientific Advisor) - Neural Networks for Language and Understanding | Creative Destruction Lab Machine Learning and Market for Intelligence conference (May 2016)

Neural networks, inspired by biological neurons, have revolutionized fields like speech and object recognition, but face challenges in achieving human-level AI, particularly in natural reasoning and understanding context.

Geoffrey Hinton (Google Scientific Advisor) - Using Backpropagation for Fine-Tuning a Generative Model | IPAM UCLA (Aug 2015)

Geoffrey Hinton's research in deep learning covers topics such as training deep networks, image denoising, and the impact of unsupervised pre-training. His adoption of rectified linear units (ReLUs) marked a shift in neural network activation functions, offering computational efficiency and improved performance.

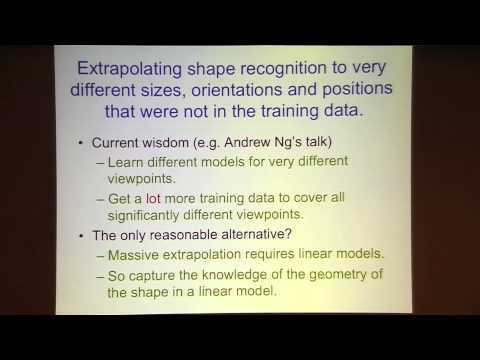

Geoffrey Hinton (Google Scientific Advisor) - Does the Brain do Inverse Graphics? (Aug 2015)

Convolutional neural networks (CNNs) discard precise positional information through pooling, which hinders complex recognition tasks. Capsule networks retain this information, enabling more efficient object recognition and understanding. Capsule networks learn the 3D structure of objects, allowing them to generalize across viewpoints and reconstruct their inputs.

Geoffrey Hinton (Google Scientific Advisor) - A Computational Principle that Explains Sex, the Brain, and Sparse Coding (Aug 2015)

Geoffrey Hinton's work explores the use of stochastic binary spikes in neural communication and applies dropout regularization to neural networks, leading to improved generalization and insights into evolutionary aspects of neural networks.
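Here is a minimal sketch of dropout as a regularizer. It uses the common "inverted dropout" variant, which rescales surviving units during training. The original formulation instead leaves training activities unscaled and multiplies the outgoing weights by the retention probability at test time; the two are equivalent in expectation. The drop rate and the toy activations are illustrative assumptions.

```python
import random


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout.

    During training, each unit is zeroed with probability p_drop and survivors are
    scaled by 1 / (1 - p_drop), so the expected activity is unchanged.
    At test time the activations pass through untouched.
    """
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
hidden = [1.0] * 1000
dropped = dropout(hidden, 0.5, rng)
print(sum(1 for a in dropped if a == 0.0))  # roughly half the units are silenced
```

Each training case effectively trains a different randomly thinned network. Units therefore cannot rely on specific partners, which is the analogy to gene mixing in sexual reproduction.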

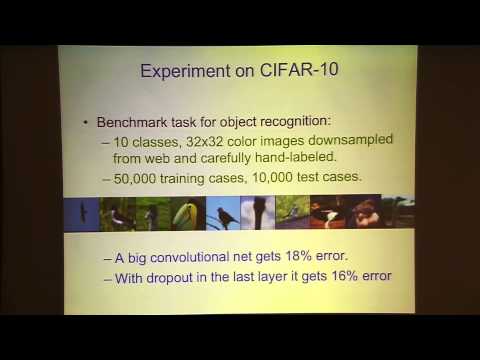

Geoffrey Hinton (Google Scientific Advisor) - Some Applications of Deep Learning | IPAM UCLA (Aug 2015)

Deep neural networks have revolutionized speech and object recognition, reducing error rates and enabling accurate predictions. Deep learning approaches have also opened new frontiers in document and image retrieval, enhancing efficiency and accuracy.

Geoffrey Hinton (University of Toronto Professor) - Recent Developments in Deep Learning | Google Tech Talk (Mar 2010)

Geoff Hinton presented stacked Restricted Boltzmann Machines (RBMs) as a way to construct deep architectures, transforming machine learning by enabling unsupervised learning and improving data processing capabilities. His critique of traditional machine learning methods emphasized the importance of understanding the underlying causal structure of data.
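The RBMs mentioned above are usually trained with contrastive divergence. Below is a minimal sketch of one CD-1 step for a binary RBM in plain Python. Weights move by the difference between the data-driven correlation ⟨v_i h_j⟩ and the same correlation after a single reconstruction. Several details are assumptions: using reconstruction probabilities rather than samples for the visible units, the learning rate, and the toy patterns. To build a deep architecture, one would train an RBM this way and then train another on its hidden activities.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sample(probs, rng):
    # Stochastic binary units: each is 1 with its given probability.
    return [1.0 if rng.random() < p else 0.0 for p in probs]


def hidden_probs(W, b_h, v):
    # p(h_j = 1 | v) = sigmoid(b_h[j] + sum_i v_i * W[i][j])
    return [sigmoid(b_h[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b_h))]


def visible_probs(W, b_v, h):
    # p(v_i = 1 | h) = sigmoid(b_v[i] + sum_j h_j * W[i][j])
    return [sigmoid(b_v[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(b_v))]


def cd1_update(W, b_v, b_h, v0, rng, lr=0.1):
    """One CD-1 step on a single binary training vector v0 (updates W, b_v, b_h in place)."""
    ph0 = hidden_probs(W, b_h, v0)    # positive phase: hidden probabilities given the data
    h0 = sample(ph0, rng)             # sample binary hidden states
    pv1 = visible_probs(W, b_v, h0)   # one-step reconstruction (probabilities, not samples)
    ph1 = hidden_probs(W, b_h, pv1)   # negative phase: hidden probabilities given the reconstruction
    for i in range(len(v0)):
        for j in range(len(b_h)):
            # <v_i h_j>_data - <v_i h_j>_reconstruction
            W[i][j] += lr * (v0[i] * ph0[j] - pv1[i] * ph1[j])
        b_v[i] += lr * (v0[i] - pv1[i])
    for j in range(len(b_h)):
        b_h[j] += lr * (ph0[j] - ph1[j])


# Toy usage (illustrative): learn two complementary binary patterns.
rng = random.Random(0)
n_visible, n_hidden = 4, 2
W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)] for _ in range(n_visible)]
b_v, b_h = [0.0] * n_visible, [0.0] * n_hidden
patterns = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
for step in range(2000):
    cd1_update(W, b_v, b_h, patterns[step % 2], rng)
print(visible_probs(W, b_v, hidden_probs(W, b_h, patterns[0])))
```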

Geoffrey Hinton (University of Toronto Professor) - The Next Generation of Neural Networks | Google TechTalks (Dec 2007)

Neural networks have evolved from simple perceptrons to generative models, leading to breakthroughs in deep learning and image recognition. Generative models, like Boltzmann machines, enable efficient feature learning and data compression, while unsupervised learning methods show promise in handling large datasets with limited labeled data.